Let us calculate!

— Gottfried Leibnitz

I admit it, I’m rather a Utopian.

Perhaps I’ve been thinking all this time that it should be possible to find a reduced set of words, symbols, or even concepts that could serve as a basic core of human expression and being, some kind of fundamental proto-language that might cut across all cultures and yet connect all individuals. Something to undo the “Tower of Babel” and be able to heal all misunderstandings, resolve all disagreements, and find everyone’s common ground. I see now I have fallen down the “perfect language” rabbit-hole.

Of course, along with our imperfect languages we also have to deal with our imperfect thoughts and our imperfect feelings. Not only do we want to hide what we’re really thinking and feeling from others, we want to hide it from ourselves. Is it because we don’t want others to know the weakness and darkness within us, or because we don’t want to face those parts of our own identities? Perhaps that is the main problem with language: the ease with which we can lie to both ourselves and others, and our eagerness to accept these lies.

Psychology is supposed to help us understand ourselves better. But before that, there were Tarot decks, Ouija boards, and the I Ching, which were supposed to illuminate our thoughts and actions and help us see, however dimly, a little more clearly into the past and future. I’m sure I’m not alone in thinking that such devices merely bring concepts to the forefront of the conscious mind and allow one to engage in creative and playful thinking. Maybe they tie into the “unconscious”, whatever that really means, and if not, then what is the source of their utility?

In the same vein, there are other instruments purported to aid in the effort to know thyself, such as Astrology and Myers-Briggs. Astrology has also been used for divination, and that use accounts for its popular and sad ubiquity in the form of “your daily horoscope”. Myers-Briggs is popular in the business world as a way to help managers manage and to resolve conflicts, and it takes itself more seriously. In my foolishness, even though I didn’t believe that there was a perfect language lost in antiquity, perhaps I thought I could invent one anew like Ramon Llull or Gottfried Leibnitz!

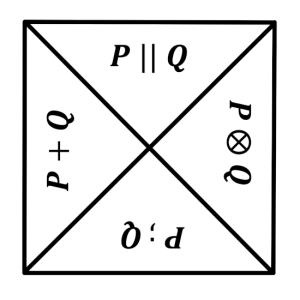

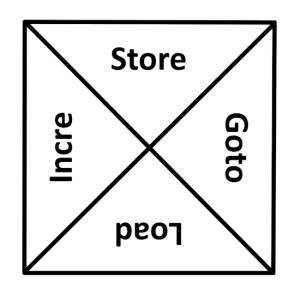

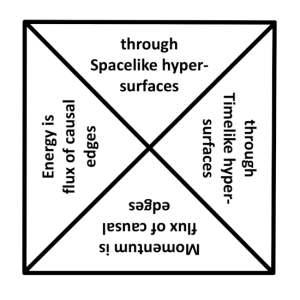

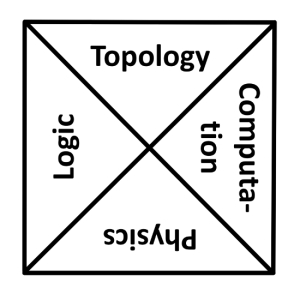

Does language reveal reality or does it mask it? Can it blend and synthesize different realities, or can it shape and create the very reality we inhabit? I’ve been mulling over the idea of what the next combogenetic alphakit might be, after chemical-molecular, biological-genetic, and symbolic-linguistic. Could it be something hyper-linguistic or hyper-cognitive, to serve as a perfect language, melding syntax, semantics, and pragmatics? Or could it be something completely different, a blending of mathematics and philosophy?

Or even a new type of computer science? Such studies are still in their infancy, so one hopes for future breakthroughs and grand theories of logical systems and (e)valuations. Could a machine that creates reality from mere thought be the perfect language we seek, one that performatively produces no ambiguity by changing the abstract into the concrete, the inner into the outer? The Krell machine in the movie Forbidden Planet was one such hypothetical device, and showed the folly of a scheme that granted god-like powers to mere mortals.

Possibly better is a system that starts from grounding axioms that are so simple and fundamental that all must agree with their basis, utilizes logics that are so straightforward and rational that all must agree with their validity, demonstrates proofs that are so rigorous that all must agree with their worth, all enabled by overarching schemas that allow the truth of all things to vigorously and irrefutably shine. Even then, humankind might be too weak to suffer the onslaught against its fragile and flawed cogitations.

But O, what a wonderful world it might be.

Further Reading:

Umberto Eco / The Search for the Perfect Language

The Arcane Arts of Ramon Llull : the Dignities

Combogenesis: a constructive, emergent cosmos

The Myers-Briggs Type Indicator

The Twelve Houses of the Zodiac

The Tempest and Forbidden Planet

The 64 Hexagrams of the I Ching

https://en.wikipedia.org/wiki/Hedgehog%27s_dilemma

[*11.132]


I’ve long wished for a succinct definition for the notion of information. What is it? What makes something information and another thing disinformation? Why do people accept things as truth that others know as false? Is information something that informs the physical universe, or is it only a way that the human mind tries to structure itself? The nonhuman world seems to get along just fine without knowing anything, doesn’t it?
